Reinforcement learning (RL) is about training an agent to take actions that maximise long-term reward. Many modern RL successes—from game-playing agents to robotics controllers—depend on policy gradient methods, which directly optimise a policy (a function that maps states to actions). However, classic policy gradient approaches can be unstable. A single large update to the policy can drastically change behaviour, break what the agent has learned, and cause performance to collapse. Proximal Policy Optimization (PPO) was designed to reduce that risk by preventing large, destructive policy updates while keeping training efficient and practical. For learners exploring RL concepts through a data scientist course in Chennai, PPO is a strong example of how careful optimisation design can make advanced models train reliably.

Why Policy Gradient Updates Become Unstable

Policy gradient methods update policy parameters using gradients estimated from sampled trajectories. In principle, this is straightforward: if an action led to higher reward than expected, increase its probability; if it performed worse, reduce its probability. The issue is that gradient estimates can be noisy and sensitive to hyperparameters like learning rate and batch size.
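As a rough formal sketch of the update rule described above, the standard policy gradient estimator can be written as follows. The notation is an assumption introduced here for illustration: `\pi_\theta` is the policy with parameters `\theta`, `a_t` and `s_t` are the sampled action and state at step `t`, and `\hat{A}_t` is an estimate of how much better the action was than expected.

```latex
\hat{g} = \hat{\mathbb{E}}_t \left[ \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, \hat{A}_t \right]
```

When `\hat{A}_t` is positive, following this gradient raises the log-probability of the action; when it is negative, the log-probability is lowered. Because the expectation is estimated from a finite batch of trajectories, the estimate inherits their noise.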

If the policy changes too much in one update, the agent may stop visiting useful states it previously relied on. This is especially common when working with complex neural network policies. Large steps can also create feedback loops: the agent’s data distribution changes after each update, which can make the next gradient estimate less reliable. In real projects, this instability looks like sudden drops in reward curves, oscillations, or training that works once and fails the next run. A well-structured data scientist course in Chennai will often highlight PPO because it addresses these issues directly without making the algorithm overly complex.

The Core Idea Behind PPO

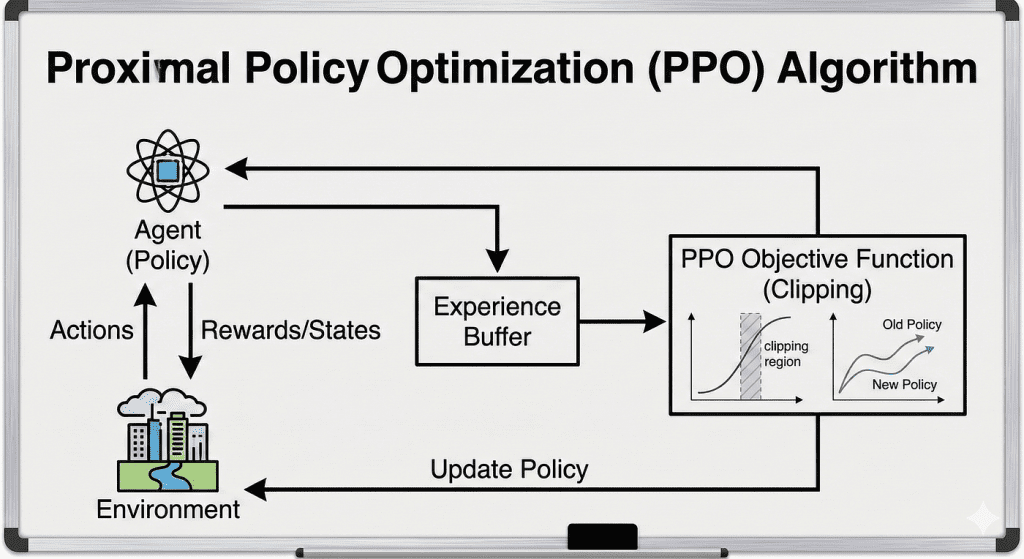

PPO builds on the trust-region idea used by earlier methods such as Trust Region Policy Optimization (TRPO): instead of blindly trusting the gradient step, we restrict how far the policy is allowed to move at each update. The key is to compare the new policy to the old one using a probability ratio:

- ratio = (probability of action under new policy) / (probability of action under old policy)

If this ratio moves far from 1, the new policy is deviating sharply from the old policy. PPO’s objective function “clips” this ratio to a narrow interval around 1, so the optimiser gains nothing from pushing it beyond that safe range.

In simple terms: PPO still improves the policy, but it refuses to reward updates that are too aggressive. This makes learning more stable while staying computationally simpler than constrained-optimisation approaches such as TRPO, which enforce an explicit limit on the KL divergence between the old and new policies.
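Written out, the ratio and the clipped objective take the following form. This is the formulation usually quoted for PPO; the symbols are introduced here for illustration: `\pi_{\theta_{\text{old}}}` is the policy that collected the data, `\hat{A}_t` is an estimate of how much better the action was than expected, and `\epsilon` is the clipping range.

```latex
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}, \qquad
L^{\text{CLIP}}(\theta) = \hat{\mathbb{E}}_t \left[ \min\left( r_t(\theta)\,\hat{A}_t,\; \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right) \hat{A}_t \right) \right]
```

Taking the minimum of the clipped and unclipped terms makes the objective a pessimistic bound: the optimiser is never credited for moving the ratio outside the interval from `1-\epsilon` to `1+\epsilon`, but it is still penalised if the ratio moves in a direction that makes things worse.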

How the Clipping Mechanism Prevents Destructive Updates

PPO typically uses a clipped surrogate objective. You can think of it like a safety guardrail:

- If an action did better than expected, PPO lets the new policy raise its probability, but only up to a limit.

- If the optimiser tries to raise it beyond that limit, the objective stops rewarding the change, so there is no incentive to go further.

- The same applies in the other direction: when an action did worse than expected, PPO stops rewarding reductions in its probability beyond the limit.
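The guardrail behaviour above can be illustrated with a minimal NumPy sketch. The function name `clipped_surrogate`, the default `clip_eps=0.2`, and the sample numbers are assumptions chosen for illustration, not part of any particular library.

```python
import numpy as np

def clipped_surrogate(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (a quantity to maximise).

    new_logp / old_logp: log-probabilities of the taken actions under the
    new and old policies. advantages: advantage estimates for those actions.
    """
    ratio = np.exp(new_logp - old_logp)              # pi_new / pi_old
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # The element-wise minimum removes any gain from leaving the clip range.
    return np.minimum(unclipped, clipped).mean()

# Hypothetical batch: three actions, each with old probability 0.5.
old_logp = np.log(np.array([0.5, 0.5, 0.5]))
new_logp = np.log(np.array([0.55, 0.9, 0.1]))       # ratios 1.1, 1.8, 0.2
advantages = np.array([1.0, 1.0, -1.0])

objective = clipped_surrogate(new_logp, old_logp, advantages)
print(objective)  # approximately 0.5
```

In this example the first ratio (1.1) is inside the range and counts in full. The second (1.8) is credited only as 1.2. The third (0.2, with a negative advantage) contributes -0.8 rather than -0.2, so pushing that probability down further earns nothing extra.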

This clipping reduces the chance that a single batch of lucky (or unlucky) trajectories causes an oversized update. PPO is also commonly paired with an “advantage” signal, which measures how much better an action performed compared to a baseline. That baseline is often produced by a value function, creating an actor–critic style approach: the actor updates the policy, while the critic estimates state values to reduce variance in learning.

Because PPO supports multiple training epochs over the same batch of data (while still controlling policy drift), it achieves a useful balance: better sample efficiency than naive policy gradients, with much better stability. This stability is one reason PPO frequently appears in practical RL implementations and course curricula, including a data scientist course in Chennai that includes reinforcement learning modules.

Practical Workflow: Where PPO Fits in a Real Pipeline

A typical PPO training loop looks like this:

- Collect trajectories using the current policy (states, actions, rewards).

- Compute returns and advantages (often using Generalized Advantage Estimation, or GAE, to trade off bias against variance in the advantage estimates).

- Update the value function to better predict returns.

- Update the policy using the PPO clipped objective, usually across a few epochs.

- Repeat until performance converges.
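The "compute returns and advantages" step in the loop above is often the least obvious one, so here is a minimal sketch of Generalized Advantage Estimation for a single trajectory. The function name `compute_gae` and the defaults `gamma=0.99` and `lam=0.95` are assumptions for illustration; the sketch also assumes no episode boundary occurs inside the trajectory.

```python
import numpy as np

def compute_gae(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Return (advantages, returns) for one trajectory of length T.

    rewards[t]  : reward received after the action at step t
    values[t]   : critic's estimate V(s_t)
    last_value  : V(s_T) used for bootstrapping (0.0 if the episode ended)
    """
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    advantages = np.zeros(len(rewards))
    next_value = last_value
    running = 0.0
    # Work backwards so each step can reuse the accumulated later terms.
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    returns = advantages + values  # regression targets for the value function
    return advantages, returns

# Hypothetical three-step trajectory with an untrained critic (all zeros).
adv, ret = compute_gae([1.0, 1.0, 1.0], [0.0, 0.0, 0.0], last_value=0.0,
                       gamma=1.0, lam=1.0)
print(adv)  # reward-to-go in this special case
```

With `lam=1.0` the estimate reduces to the discounted return minus the value baseline (higher variance, less bias from the critic), while `lam=0.0` uses only the one-step TD error (lower variance, more reliance on the critic).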

In real-world tasks, PPO has been used for continuous control (robot arms, locomotion), discrete decision problems (resource allocation, strategy games), and even preference-based fine-tuning setups where a policy must improve without drifting too far from prior behaviour. Understanding this workflow helps learners connect RL theory to engineering practice—something emphasised in many applied learning tracks like a data scientist course in Chennai.

Common Implementation Tips and Pitfalls

PPO is robust, but not magic. Stability still depends on sensible choices:

- Clipping range: Too tight can slow learning; too loose can reintroduce instability. Values between roughly 0.1 and 0.3 are common starting points, with 0.2 a widely used default.

- Batch size and rollout length: More data per update often reduces noise.

- Normalising advantages: Commonly improves training consistency.

- Entropy bonus: Encourages exploration and helps avoid premature convergence.

- Value function balance: If the critic is poor, the advantage estimates become unreliable.
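Several of the tips above translate into only a few lines of code. The sketch below shows advantage normalisation, an entropy term for a discrete policy, and one common way to combine the pieces into a single loss. All function names and the coefficients `value_coef=0.5` and `entropy_coef=0.01` are assumptions for illustration; real implementations differ in the details.

```python
import numpy as np

def normalise_advantages(advantages, eps=1e-8):
    """Rescale a batch of advantages to zero mean and unit variance."""
    advantages = np.asarray(advantages, dtype=float)
    return (advantages - advantages.mean()) / (advantages.std() + eps)

def categorical_entropy(probs):
    """Entropy of a discrete action distribution (last axis = actions)."""
    probs = np.asarray(probs, dtype=float)
    return -(probs * np.log(probs + 1e-12)).sum(axis=-1)

def ppo_total_loss(policy_objective, value_loss, entropy,
                   value_coef=0.5, entropy_coef=0.01):
    """Combined loss to minimise.

    The policy objective and entropy are maximised, so they enter with a
    minus sign; the value loss is minimised, so it enters with a plus sign.
    """
    return -policy_objective + value_coef * value_loss - entropy_coef * entropy

norm_adv = normalise_advantages([2.0, 4.0, 6.0, 8.0])
uniform_entropy = categorical_entropy([0.5, 0.5])   # highest for two actions
loss = ppo_total_loss(policy_objective=1.0, value_loss=2.0, entropy=0.5)
print(norm_adv, uniform_entropy, loss)
```

Raising `entropy_coef` pushes the policy to stay more random for longer, while `value_coef` controls how strongly critic errors influence the shared update when actor and critic share parameters.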

A practical approach is to start from standard defaults, monitor learning curves, and change one parameter at a time. This disciplined tuning mindset is a transferable skill, whether you are optimising RL agents or more traditional machine learning systems.

Conclusion

Proximal Policy Optimization (PPO) is a widely used policy gradient method because it tackles a core RL problem: unstable learning caused by overly large policy updates. By clipping the policy probability ratio, PPO introduces a simple but effective constraint that keeps updates “proximal” to the previous policy, reducing destructive behaviour shifts. Its blend of stability, simplicity, and strong empirical results makes it a popular baseline across RL research and production experiments. If you are learning reinforcement learning through a data scientist course in Chennai, PPO is a valuable algorithm to study, not only for what it achieves, but for the broader lesson it teaches: smarter optimisation often matters as much as model complexity.